**3D Shape Induction from 2D Views of Multiple Objects**

[Matheus Gadelha](http://mgadelha.me), [Subhransu Maji](http://people.cs.umass.edu/~smaji/) and [Rui Wang](https://people.cs.umass.edu/~ruiwang/)

_University of Massachusetts - Amherst_

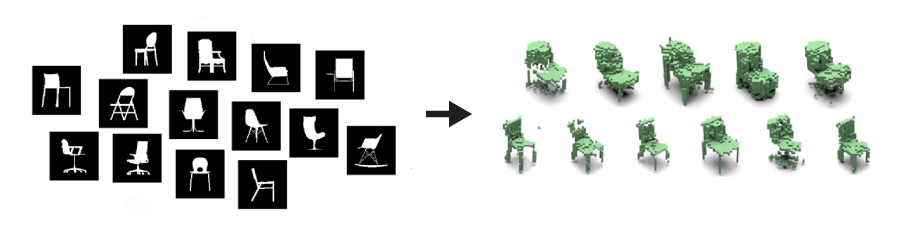

In this paper we investigate the problem of inducing a distribution over three-dimensional structures given two-dimensional views of multiple objects taken from unknown viewpoints. Our approach, called "Projective Generative Adversarial Networks" (PrGANs), trains a deep generative model of 3D shapes whose projections match the distributions of the input 2D views. The addition of a projection module allows us to infer the underlying 3D shape distribution without using any 3D data, viewpoint information, or annotation during the learning phase. We show that our approach produces 3D shapes of comparable quality to GANs trained on 3D data for a number of shape categories, including chairs, airplanes, and cars. Experiments also show that the disentangled representation of 2D shapes into geometry and viewpoint leads to a good generative model of 2D shapes. The key advantage is that our model allows us to predict 3D shape and viewpoint, and to generate novel views, from an input image in a completely unsupervised manner.
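To make the role of the projection module concrete, here is a minimal sketch of one possible way to render a voxel occupancy grid into a 2D view. It is an illustration under stated assumptions, not the implementation from the linked repository: the function name `project`, the `(depth, height, width)` axis layout, the restriction to 90-degree viewpoint steps, and the soft-occupancy formula `1 - exp(-sum)` are all choices made for this example.

```python
import numpy as np


def project(voxels: np.ndarray, quarter_turns: int = 0) -> np.ndarray:
    """Project a cubic occupancy grid (depth, height, width) to a 2D view.

    Hypothetical helper for illustration only. The viewpoint is limited to
    90-degree steps about the vertical axis to keep the sketch dependency-free.
    """
    # Rotate the grid about the vertical (height) axis, i.e. in the
    # depth/width plane, to simulate a change of viewpoint.
    rotated = np.rot90(voxels, k=quarter_turns, axes=(0, 2))
    # Accumulate occupancy along the line of sight (depth axis) and squash
    # the result into [0, 1): empty rays map to 0, dense rays approach 1.
    return 1.0 - np.exp(-rotated.sum(axis=0))


# A thin rod running along the depth axis at row 1, column 2.
grid = np.zeros((4, 4, 4))
grid[:, 1, 2] = 1.0

front = project(grid, quarter_turns=0)  # rod seen end-on: a single bright pixel
side = project(grid, quarter_turns=1)   # rod seen from the side: a horizontal line
```

Because the projection is a smooth function of the voxel values, a discriminator that only ever sees 2D views can still pass gradients back to a 3D generator, which is the property the abstract relies on.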

Paper
==========================================================================================

![Paper thumbnail](fig/paper_tb.png)

Generated Shapes
==========================================================================================

Shape Interpolation
==========================================================================================

Code
============================================================================================

[GitHub Link](https://github.com/matheusgadelha/PrGAN)